17 May is World Telecommunication and Information Society Day. The date marks the anniversary of the signing of the first International Telegraph Convention in 1865 and the creation of the International Telegraph Union, forerunner of today’s International Telecommunication Union.

The theme for 2018 is the potential of artificial intelligence. We’re all so used to hearing electronic devices speak to us these days that talking machines seem commonplace. The voice-on-a-chip technologies behind them trace their origins back, in part, to a speech synthesis computer on display in the National Museum of Scotland’s Communicate gallery.

Machines that speak

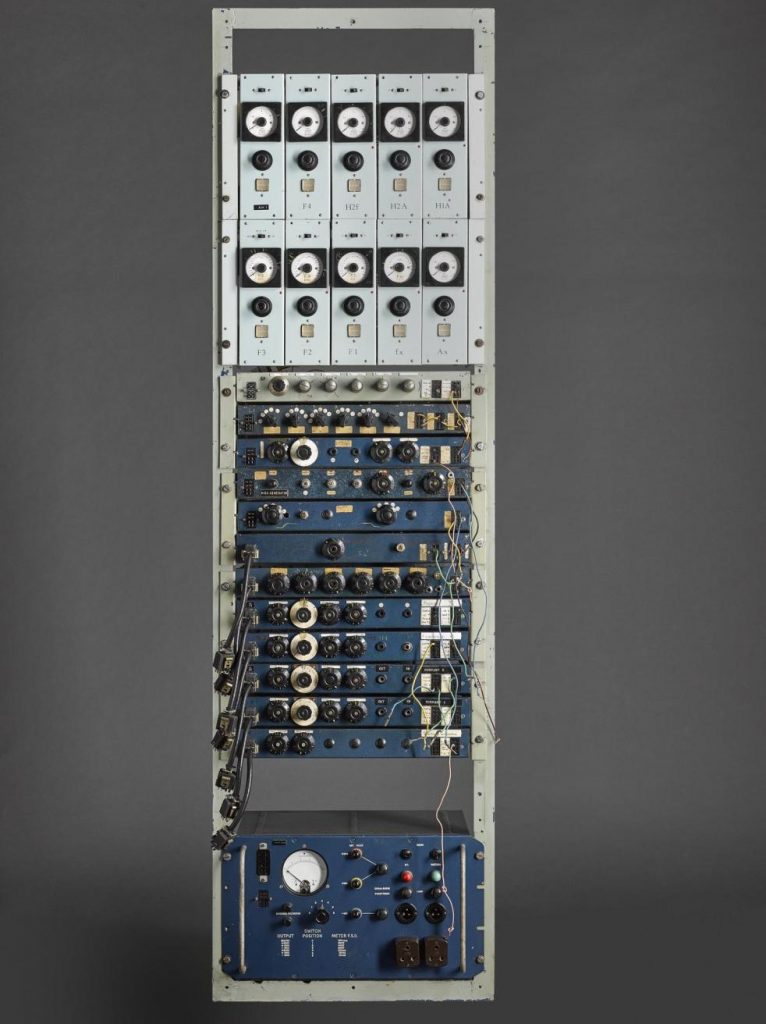

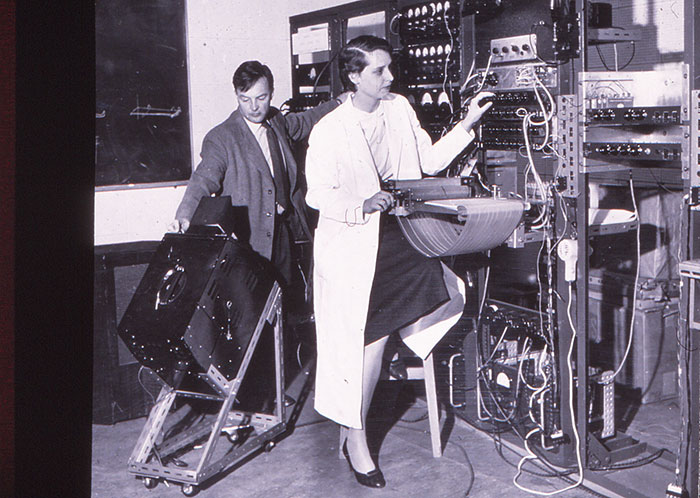

One of the first computers to be programmed to generate human sounds artificially, rather than use recorded speech, was the University of Edinburgh’s Parametric Artificial Talker, better known as PAT. PAT forms a link between the earlier mechanical speaking machines and the later synthesiser on a chip.

In 1958 the BBC broadcast a documentary series entitled Eye on Research, which featured cutting-edge university research projects from across the UK. One of the programmes was called ‘The Six Parameters of PAT’. It opens by saying:

“Up in Edinburgh the scientists have, amongst their apparatus, what one can only describe as a talking machine…”

For those of us who use satnavs every day, or find out about the weather by asking Alexa, it is hard to appreciate the wonder behind the Daily Telegraph and Morning Post headline of 30 November 1959, “Teaching a Machine to Talk Back”:

“It is not acting like a gramophone, a radio, a tape-recorder or a telephone. It is literally a talking machine, and it is producing, not reproducing, words.”

The first version of PAT was developed at the Signals Research and Development Establishment in 1952 to explore ways of reducing the bandwidth needed to carry speech on submarine telephone cables. The particular target was the soon-to-be-laid Transatlantic Telephone cable, better known as TAT-1, which would run from Oban on the west coast of Scotland to Clarenville in Newfoundland, Canada.

TAT-1

The TAT-1 route consisted of two cables, one for each direction of transmission, with repeaters spliced in at 60 km intervals to boost the signal over the 3,620 km crossing. The cable ship actually laid nearer 3,900 km of cable; the extra length was needed to follow the peaks and troughs of the sea floor, which at some points lies nearly 5 km below the surface of the ocean. The cables provided 35 telephone circuits, that is, 35 simultaneous telephone calls across the Atlantic, plus one additional circuit for sending telegraph messages.

It was an engineering feat in its own right, taking years of research to develop, since all the components, particularly the repeaters, had to be robust enough to lie on the seabed and last at least twenty years without maintenance.

It was the world’s first long-distance sub-sea telephone cable and followed routes similar to those of the pioneering transatlantic telegraph cables of nearly a hundred years earlier.

The subsea Transatlantic Telephone service was opened on 25 September 1956. That evening a concert from Carnegie Hall in New York was transmitted to London via the cable and broadcast by the BBC.

In its first year, those two cables carried nearly 300,000 calls to and from the US and Canada at a cost of £3 for three minutes. This might seem expensive now, but it was a vast improvement on the alternative: £9 for three minutes on an unreliable radio link.

TAT-1 also became crucial to world politics during the Cold War: after the Moscow-Washington hotline between the American and Soviet leaders was set up in 1963, the cable carried it across the Atlantic.

TAT-1 was just the start of a revolution in global cable communications. It was retired in 1978.

Sixty years on, the latest generation of fibre-optic cables carries hundreds of millions of telephone calls, emails, internet voice calls and streams of computer data.

So how did this relate to the sounds being created on PAT in Edinburgh?

As one of the scientists working on PAT said in the BBC documentary:

“It seemed to me we could make much better use of these cables if we abandoned sending signals which were faithful copies of the intricate soundwave patterns of speech and sent instead signals that described a simpler speech pattern but which could still be understood.”

The Armed Forces were also interested in the work on artificially generated speech for battlefield communication, so the original PAT research was funded by the Ministry of Supply, who financed Army research projects.

Studying speech

This idea of reducing speech to simpler but still intelligible patterns caught the attention of the University’s Professor of Phonetics, David Abercrombie, who realised its potential for the study of phonetics (the study of speech sounds). The parts of PAT in the Museum collections were built at the University of Edinburgh in 1956. It was among the first machines anywhere to synthesise convincing continuous speech under electronic control. This research on speech synthesis helped researchers understand the mechanism and psychology of speech. It was the first step towards giving computer applications a voice.

Speech consists of two basic types of sound: that produced by the vibration of the vocal cords in the larynx, at the base of the throat, and that produced by air passing through a narrow opening, creating a hissing or breathy sound. PAT generated an electronic larynx tone and a hissing sound, and could vary the loudness of each. The Guardian’s article on PAT in 1959 described the process:

“PAT creates speech by generating pulses corresponding to the sounds made by the vocal cords. These pulses are modified by electrical circuits to simulate the action of the human vocal apparatus.”

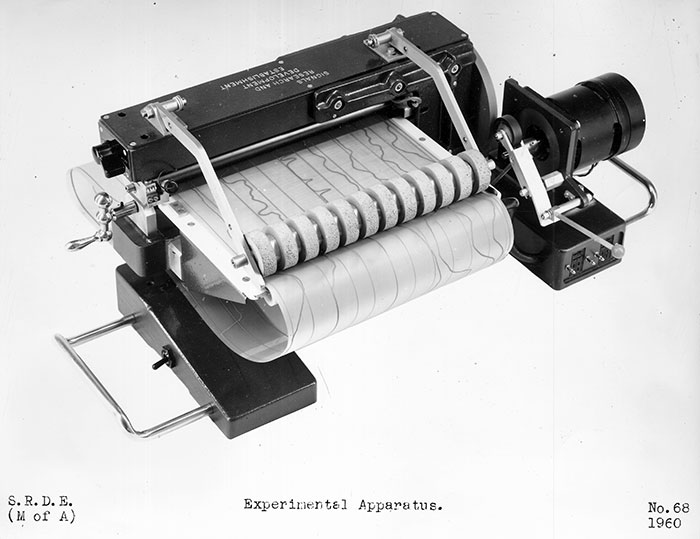

Operators fed speech parameters, drawn as tracks in conductive ink on plastic sheets and later on glass slides, into a mechanism that translated the tracks into varying voltage signals. These voltages controlled PAT’s sound-producing generators. Initially, the tracks were taken from tracings of human speech patterns produced on a spectrogram. Through years of experimenting with these tracings, the operators discovered which core elements of speech (the variables, or parameters) were vital for producing specific sounds: the ‘Six Parameters’ of the documentary title.
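Each track on a slide was, in effect, a graph of one parameter against time. As a rough digital analogy (an assumption, not a description of PAT’s actual reading mechanism), a traced track can be stored as hypothetical (time, value) breakpoints and read off at regular intervals by linear interpolation, giving the smoothly varying control signal a synthesiser needs. The function names `sample_track` and `track_to_control` and the 10 ms default step are illustrative choices made for this sketch.

```python
from bisect import bisect_right


def sample_track(breakpoints, t_ms):
    """Value of a traced track at time t_ms, by linear interpolation.

    breakpoints: (time_ms, value) pairs with strictly increasing times, a
    hypothetical digital stand-in for one ink track on a slide.
    """
    times = [t for t, _ in breakpoints]
    if t_ms <= times[0]:           # before the track starts: hold the first value
        return breakpoints[0][1]
    if t_ms >= times[-1]:          # after the track ends: hold the last value
        return breakpoints[-1][1]
    i = bisect_right(times, t_ms)  # index of the first breakpoint strictly after t_ms
    (t0, v0), (t1, v1) = breakpoints[i - 1], breakpoints[i]
    # Straight-line interpolation between the two surrounding breakpoints.
    return v0 + (v1 - v0) * (t_ms - t0) / (t1 - t0)


def track_to_control(breakpoints, duration_ms, step_ms=10):
    """Read the track every step_ms milliseconds to produce a control sequence."""
    return [sample_track(breakpoints, t) for t in range(0, duration_ms, step_ms)]
```

For instance, a resonance track gliding from 900 Hz to 1800 Hz over 200 ms and read every 50 ms gives `track_to_control([(0, 900), (200, 1800)], 200, 50)` → `[900, 1125.0, 1350.0, 1575.0]`.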

These are fundamental pitch, loudness, hiss, and the pitch of each of the three lowest vowel resonances (known as formants), which are set by the different positions of the tongue, jaw and lips.
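To make the six parameters concrete, here is a minimal Python sketch of a parametric (formant) synthesiser. It is an illustration under stated assumptions, not a reconstruction of PAT’s analogue electronics: it works digitally at an assumed 10 kHz sample rate, models the larynx tone as one impulse per pitch period and the hiss as random noise, and passes their sum through three resonators in series, one per vowel resonance. The resonators use the standard two-pole digital resonator of later formant synthesisers; the `Frame` class, the `synthesise` function, the fixed resonance bandwidths and the 10 ms frame length are hypothetical choices made for this example.

```python
import math
import random
from dataclasses import dataclass

SAMPLE_RATE_HZ = 10_000               # assumed digital sample rate
BANDWIDTHS_HZ = (80.0, 100.0, 150.0)  # assumed fixed bandwidths of the three resonances


@dataclass
class Frame:
    """One time-slice of the six control parameters (hypothetical layout)."""
    f0_hz: float     # fundamental pitch of the larynx tone; 0 means no tone
    loudness: float  # amplitude of the larynx tone
    hiss: float      # amplitude of the noise (hiss) source
    f1_hz: float     # pitch of the first vowel resonance
    f2_hz: float     # pitch of the second vowel resonance
    f3_hz: float     # pitch of the third vowel resonance


def resonator_coeffs(freq_hz, bandwidth_hz, fs=SAMPLE_RATE_HZ):
    """Coefficients of a two-pole digital resonator with unity gain at 0 Hz."""
    c = -math.exp(-2 * math.pi * bandwidth_hz / fs)
    b = 2 * math.exp(-math.pi * bandwidth_hz / fs) * math.cos(2 * math.pi * freq_hz / fs)
    a = 1 - b - c
    return a, b, c


def synthesise(frames, frame_ms=10, fs=SAMPLE_RATE_HZ, seed=0):
    """Turn a sequence of parameter frames into a list of audio samples."""
    rng = random.Random(seed)               # seeded so the hiss is reproducible
    samples_per_frame = int(fs * frame_ms / 1000)
    state = [[0.0, 0.0] for _ in range(3)]  # previous two outputs of each resonator
    phase = 0.0                             # position within the current pitch period
    out = []
    for fr in frames:
        resonances = (fr.f1_hz, fr.f2_hz, fr.f3_hz)
        coeffs = [resonator_coeffs(f, bw, fs) for f, bw in zip(resonances, BANDWIDTHS_HZ)]
        for _ in range(samples_per_frame):
            # Source: one impulse per pitch period (the "larynx tone") plus noise (the "hiss").
            pulse = 0.0
            if fr.f0_hz > 0:
                phase += fr.f0_hz / fs
                if phase >= 1.0:
                    phase -= 1.0
                    pulse = 1.0
            x = fr.loudness * pulse + fr.hiss * rng.uniform(-1.0, 1.0)
            # Filter: pass the source through the three resonances in series.
            for (a, b, c), st in zip(coeffs, state):
                y = a * x + b * st[0] + c * st[1]
                st[1], st[0] = st[0], y
                x = y
            out.append(x)
    return out
```

For example, twenty frames of `Frame(120.0, 1.0, 0.0, 500.0, 1500.0, 2500.0)` give 0.2 seconds of a steady buzz roughly like a neutral vowel; the samples could be scaled and written out with Python’s standard-library `wave` module to listen to them. Sending the noise through the vowel resonances and stepping the controls every 10 ms are simplifications of this sketch; a changing sound is simply a changing list of frames.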

The hardest sounds to recreate artificially were the hiss sounds, such as the difference between ‘s’ and ‘sh’. At the time of filming in 1958, the researchers had recreated nearly all the sounds of human language, but a couple still evaded them. As they say in the BBC documentary:

“PAT talks a very large number of languages very nicely. However, there are still some things PAT can’t manage. Some of the notorious difficulties in languages such as the Chinese tones and things like the sound “rrrrrrr”, which PAT will have to do if she wants to talk certain types of Scots, as she ought to be able to do, she has never been able to do that at all so far.”

Using synthetic speech

Research with synthetic speech had less direct impact on telephone cable bandwidth than anticipated at the time.

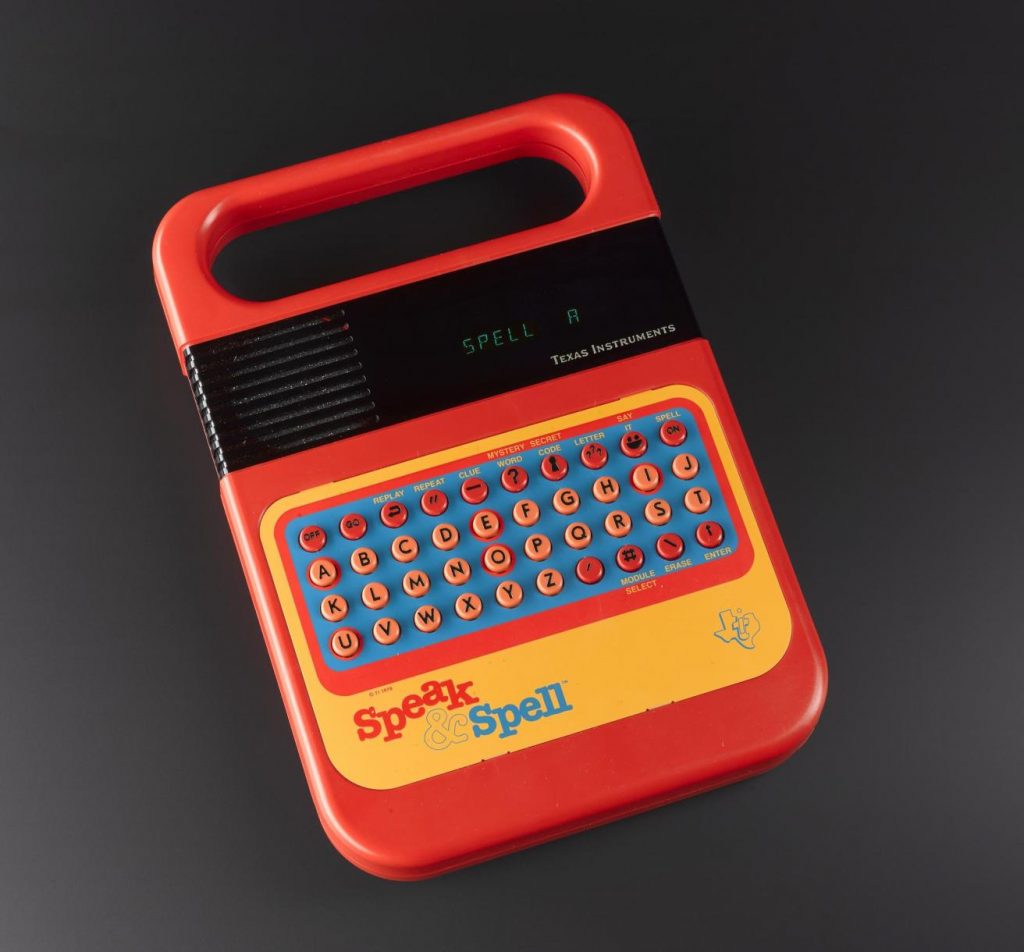

For most people, a first encounter with electronically synthesised voices probably came through a generation of toys developed in the late 1970s. Companies such as Texas Instruments, better known at the time for making electronic calculators, had developed a voice on a chip. Its sound quality limited its uses, but it was good enough to feature in one of the best-selling toys of 1978, the Speak & Spell.

The advances in electronic synthetic speech enabled by the pioneering work on PAT, together with the miniaturisation made possible by microchips, had a more direct impact on assistive speech devices for people who have lost the ability to speak or were born without speech. One such person is engineer Toby Churchill, who demonstrated his text display device on the BBC’s Tomorrow’s World programme in the 1970s. These were the pioneering days for handheld assistive devices, as Toby acknowledged:

“Technology will change, users’ needs won’t”.

Synthetic speech has improved hugely since 1984, when the late Professor Stephen Hawking was first kitted out with his electronic voice, called Perfect Paul, which had been developed as the default voice on an early telephone voice interaction device.

But those working with assistive electronic voices are still grappling with some of the issues the PAT scientists faced: how to create a truly human-sounding voice, with all the nuances that requires. In Edinburgh, the Euan MacDonald Centre and the Anne Rowling Regenerative Neurology Clinic, working with the University of Edinburgh’s Centre for Speech Technology Research, are continuing the quest to make a machine that talks through their Speak: Unique research project.

Similar pioneering work on speech-generating devices is also being carried out at the University of Dundee, whose researchers estimate:

“a quarter of a million people in the UK alone are unable to speak and are at risk of isolation. They depend on Voice Output Communication Aids (VOCAs or Speech Generating Devices) to compensate for their disability.”

We have come a long way since PAT began the 1958 documentary by asking, “Do you understand what I say to you?” The 2018 theme for World Telecommunication Day on 17 May is the potential of artificial intelligence. However that potential is realised over the coming decades, we can be confident that the work of the PAT team, and of those continuing it in Edinburgh, Dundee and beyond, will play a role in its future, as well as making a fundamental difference to the lives of those using assistive speech technologies today.